Big Data Compute Workload Migration, Cloud Data Management & Automated Disaster Recovery for Enterprise.

MLens is a one-stop solution for all your Big Data needs, from Automated Disaster Recovery to Compute Workload Migration, and everything in between.

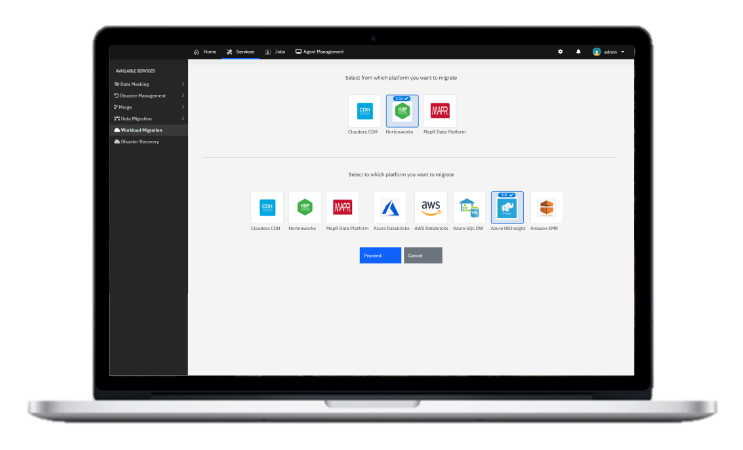

Workload Migration from On-Premises Hadoop Distributions to Databricks Cloud or Azure HDInsight.

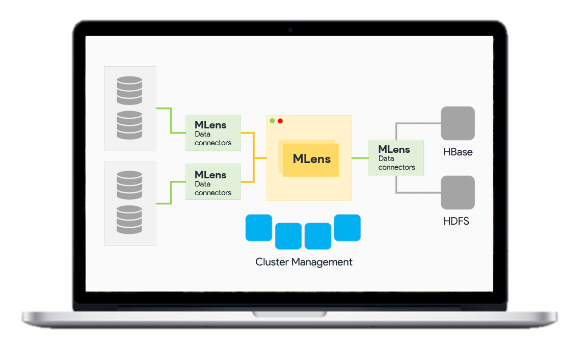

Identifies changes to source tables/directories in real time